Project Overview

Guided Rest is an AI-powered meditation app that generates custom guided meditations using large language models (LLMs) and text-to-speech technology. Users can receive personalized meditation sessions tailored to their needs, delivered through both web and mobile platforms.

My Contributions: I designed the complete visual identity and user experience, implemented the web application (including Stripe payment integration and LLM/TTS pipelines), and built the entire iOS app from scratch as the sole iOS developer.

Development Timeline: 6-month collaborative project with 2 developer friends. We launched the web MVP in 1 month, then iteratively improved the web app while I simultaneously developed the iOS application.

Team Structure:

- Myself: Design lead, web implementation (payments, LLM/TTS integration), solo iOS developer, DevOps (GCP setup and monitoring)

- 2 Developer Friends: Backend infrastructure and additional web features

Design Process

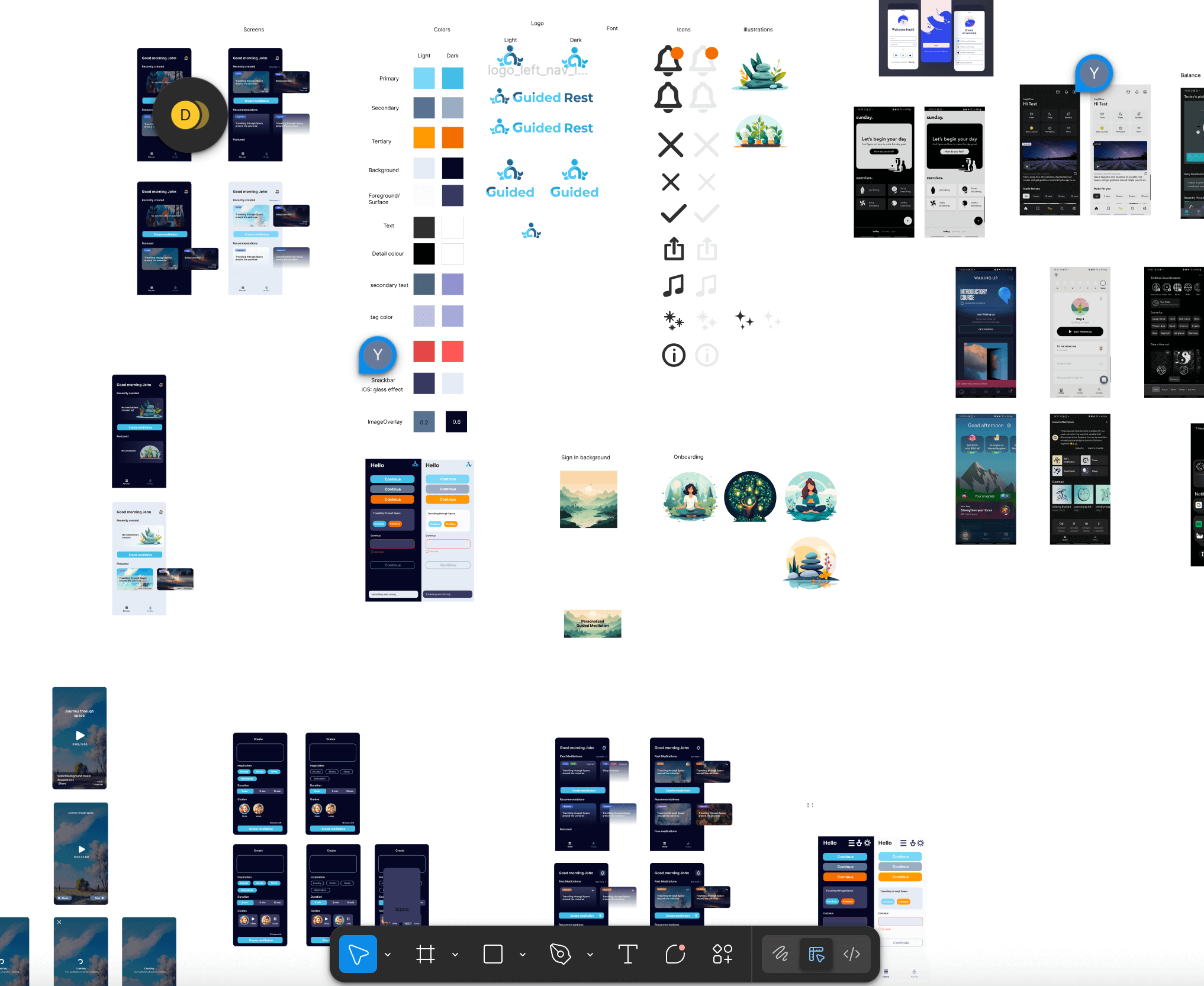

Brand & Visual Identity

I designed the complete visual identity for Guided Rest from the ground up, including the logo, color palette, and design system used across both web and mobile platforms. Given the small team, the process was simple: create a mockup, present it to the team, and iterate based on feedback.

Technical Architecture

Web Platform - Next.js & Serverless

The web application is built with Next.js and deployed using Google Cloud Platform (GCP) Cloud Run for a fully serverless architecture.

Key Technologies:

- Next.js: React framework for the web interface

- TypeScript: Type-safe development

- GCP Cloud Run: Serverless container hosting for a scalable backend

- Serverless Architecture: Auto-scaling, pay-per-use infrastructure

AI-Powered Meditation Generation

The core innovation of Guided Rest is its ability to generate personalized meditations on demand.

LLM Integration:

- Connected to an LLM API for meditation script generation

- Custom logic layer processes user preferences and context

- Dynamically generates meditation scripts based on:

  - User mood and needs

  - Session length preferences

  - Meditation style (mindfulness, sleep, stress relief, etc.)

  - User history and feedback
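As a rough illustration of how the inputs listed above might be turned into a prompt, here is a minimal TypeScript sketch. It is not the project's actual code: the `SessionRequest` fields, the `buildMeditationPrompt` function, and the 100 words-per-minute pacing figure are all assumptions made for the example.

```typescript
// Hypothetical input shape; field names are assumptions for illustration.
type MeditationStyle = "mindfulness" | "sleep" | "stress-relief";

interface SessionRequest {
  mood: string;
  lengthMinutes: number;
  style: MeditationStyle;
  recentFeedback: string[]; // notes from earlier sessions, possibly empty
}

// Assumed speaking pace for a slow guided meditation (not a measured value).
const WORDS_PER_MINUTE = 100;

// Builds a plain-text prompt from the user's preferences and history.
function buildMeditationPrompt(req: SessionRequest): string {
  const targetWords = Math.round(req.lengthMinutes * WORDS_PER_MINUTE);
  const lines: string[] = [
    "You are a calm meditation guide.",
    "Write a " + req.style + " meditation script of about " + targetWords + " words.",
    "The listener currently feels: " + req.mood + ".",
  ];
  // Only mention feedback when there is some, so the prompt stays short.
  if (req.recentFeedback.length > 0) {
    lines.push("Take this feedback from earlier sessions into account:");
    for (const note of req.recentFeedback) {
      lines.push("- " + note);
    }
  }
  return lines.join("\n");
}
```

Deriving a word target from the session length is one simple way to keep the generated script close to the requested duration once it is read aloud.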

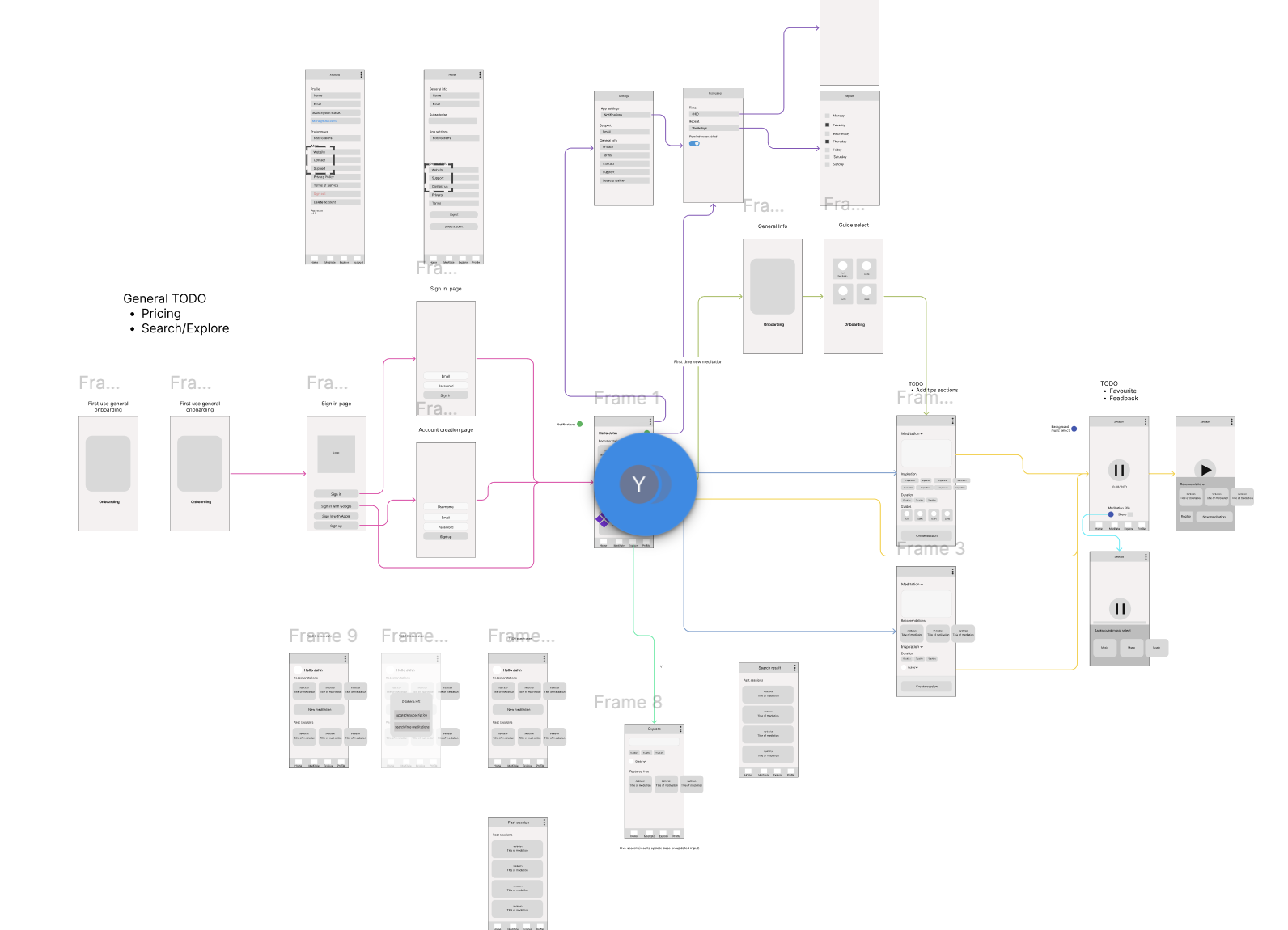

Wireframing & UX

After the web application was built, we created wireframes to map out user flows and ensure a seamless experience in the mobile app, which was intended to be the main entry point. The wireframes guided development and helped align the team on the user experience vision.

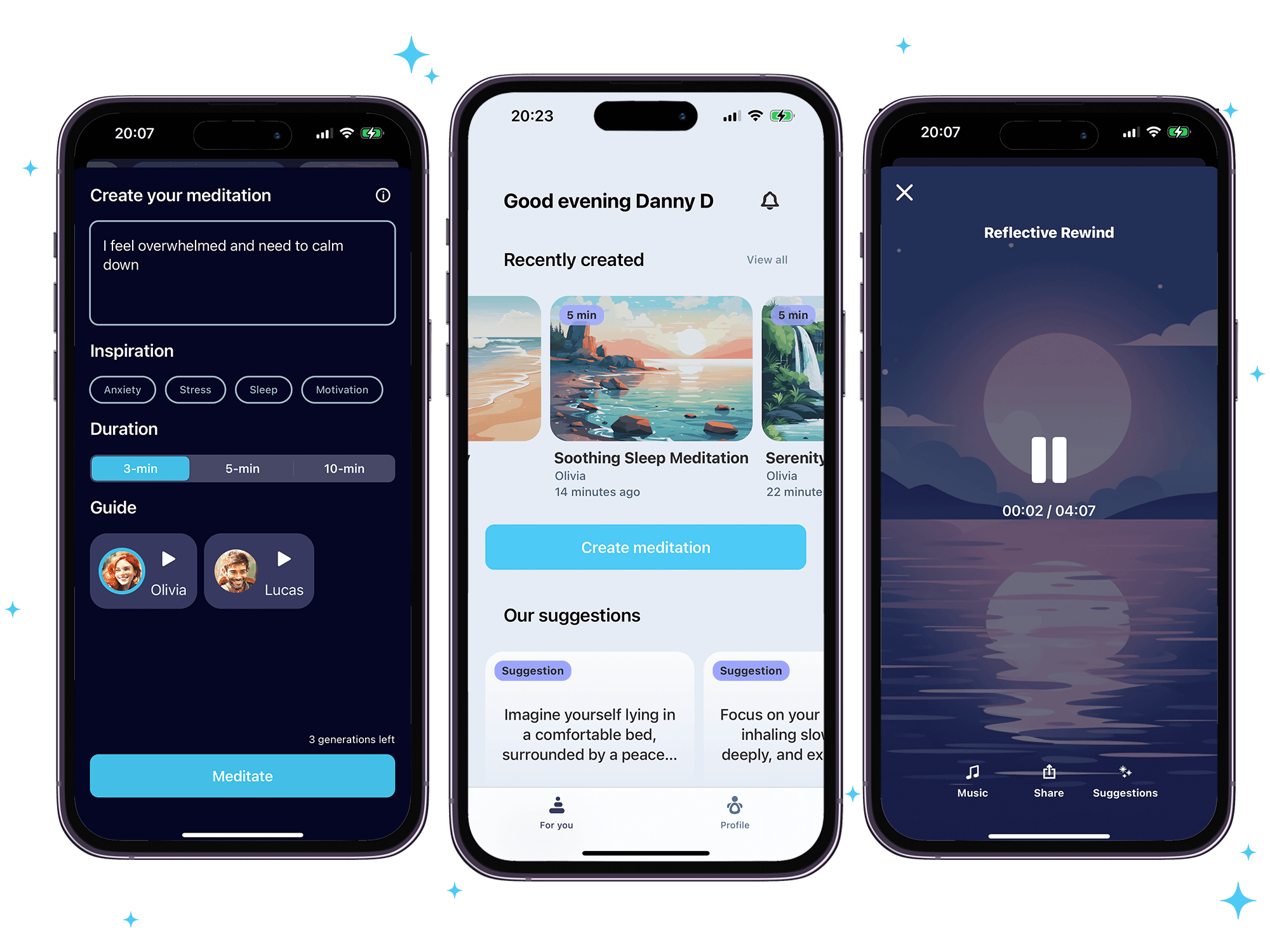

iOS Application

Solo Development

I designed and developed the entire iOS application from scratch as the only iOS developer on the project.

Technologies:

- Swift: Native iOS development

- SwiftUI: Modern declarative UI framework

- Combine: Handle asynchronous events

- AVFoundation: Audio playback and controls

- URLSession: API communication with backend

iOS app demonstration showcasing the user interface and meditation playback

Technical Challenges & Solutions

Challenge 1: LLM Response Consistency

Problem: LLM responses could vary in format and quality, making it difficult to reliably generate meditation scripts.

Solution:

- Designed a robust prompt engineering system with strict templates

- Implemented response validation and retry logic

- Added a custom processing layer to normalize and enhance outputs

- Built a feedback loop to improve prompts over time
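The validation and retry idea can be sketched roughly as follows. This is an illustrative example under stated assumptions, not the project's implementation: it assumes the prompt asks the model for JSON with a `title` and a list of `segments`, and the names `MeditationScript`, `parseScript`, and `generateWithRetry` are hypothetical. The model call is passed in as a function so the sketch does not depend on any particular LLM SDK.

```typescript
// Assumed response format: {"title": "...", "segments": ["...", "..."]}.
interface MeditationScript {
  title: string;
  segments: string[];
}

// Returns a normalized script, or null if the raw response is malformed.
function parseScript(raw: string): MeditationScript | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON at all
  }
  if (typeof data !== "object" || data === null) return null;
  const candidate = data as { title?: unknown; segments?: unknown };
  if (typeof candidate.title !== "string" || candidate.title.trim() === "") return null;
  if (!Array.isArray(candidate.segments) || candidate.segments.length === 0) return null;
  const segments: string[] = [];
  for (const segment of candidate.segments) {
    if (typeof segment !== "string" || segment.trim() === "") return null;
    segments.push(segment.trim()); // normalize stray whitespace
  }
  return { title: candidate.title.trim(), segments };
}

// Calls the model until a response validates, up to maxAttempts times.
async function generateWithRetry(
  callModel: (attempt: number) => Promise<string>,
  maxAttempts: number = 3
): Promise<MeditationScript> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const raw = await callModel(attempt);
    const script = parseScript(raw);
    if (script !== null) return script;
  }
  throw new Error("No valid script after " + maxAttempts + " attempts");
}
```

Capping the number of attempts keeps API cost bounded when the model repeatedly returns unusable output.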

Challenge 2: Real-Time Audio Generation Latency

Problem: Users had to wait for TTS generation before starting meditations.

Solution:

- Added streaming audio generation

- Optimized GCP Cloud Run cold starts

- Implemented seamless stitching of generated audio segments
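One plausible way to reduce the wait, sketched below, is to split the script into sentence-aligned chunks and synthesize them one at a time, handing each piece of audio to the player as soon as it is ready. This is an assumption about the general approach, not a description of the actual pipeline; `splitIntoChunks`, `streamSpeech`, and the injected `synthesize` function are hypothetical names, and no specific TTS API is implied.

```typescript
// Splits a script into chunks of whole sentences, each at most maxChars long.
// A single sentence longer than maxChars becomes its own chunk.
function splitIntoChunks(script: string, maxChars: number): string[] {
  const sentences: string[] = script.match(/[^.!?]+[.!?]*/g) || [];
  const chunks: string[] = [];
  let current = "";
  for (const sentence of sentences) {
    const trimmed = sentence.trim();
    if (trimmed === "") continue;
    if (current !== "" && current.length + 1 + trimmed.length > maxChars) {
      chunks.push(current);
      current = trimmed;
    } else {
      current = current === "" ? trimmed : current + " " + trimmed;
    }
  }
  if (current !== "") chunks.push(current);
  return chunks;
}

// Synthesizes chunks in order and emits each one as soon as it is ready,
// so playback can begin after the first chunk instead of the whole script.
async function streamSpeech(
  chunks: string[],
  synthesize: (text: string) => Promise<Uint8Array>, // stand-in for a TTS call
  onAudio: (audio: Uint8Array, index: number) => void
): Promise<void> {
  let index = 0;
  for (const chunk of chunks) {
    const audio = await synthesize(chunk);
    onAudio(audio, index);
    index++;
  }
}
```

Cutting at sentence boundaries means the joins between audio segments fall on natural pauses, which makes stitching them together less noticeable.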

What I Learned

Working with LLMs

- Prompt engineering techniques for consistent outputs

- Managing API costs and rate limits

- Handling LLM response variability

- Building reliable systems on top of probabilistic models

Text-to-Speech Technology

- TTS model selection and evaluation

- Audio quality optimization

- Managing generated audio assets

- Real-time audio processing and streaming

Serverless Architecture

- GCP Cloud Run deployment and configuration

- Cold start optimization strategies

- Serverless cost management

- Scaling considerations for AI workloads

iOS Development

- Gained hands-on experience with SwiftUI and modern iOS patterns

- Implemented background audio playback with proper state management

Full-Stack Design

- End-to-end product design (brand → wireframes → implementation)

- Cross-platform design consistency

- Designing for AI-powered experiences

- Balancing technical constraints with UX goals

Tech Stack Summary

| Category | Technologies |
|---|---|
| Design | Figma |
| Web Frontend | Next.js, React, TypeScript |
| Backend, Deployment | GCP |
| AI/ML | ChatGPT API, TTS Models |
| Payments | Stripe |
| iOS | Swift, SwiftUI, AVFoundation |
| Android | Kotlin, Compose |

Project Status

Note: This project is no longer live. The demos and TestFlight applications are available upon request.

Complete application showcase across web and mobile platforms

This project showcases my abilities in full-stack development, native iOS development, AI/ML integration, product design, and building and maintaining scalable web applications.